Tracking gray market activity and transshipment is a complex challenge for brands selling products online. Gray market goods are genuine products sold outside a brand's authorized distribution channels, and transshipment diverts goods intended for one market into another. These unauthorized sales channels erode brand value, undercut authorized distributors, and can lead to customer dissatisfaction. Artificial intelligence, specifically multimodal models like Gemini combined with MLOps platforms like Google Cloud's Vertex AI, can automate the detection, analysis, and prediction of such activity.

This blog post details how these AI tools can be leveraged to create a comprehensive gray market tracking system for popular e-commerce sites.

The AI Advantage in Tracking Gray Market Activity

Traditional methods of tracking gray market activity are often manual, slow, and reactive. AI brings:

Scalability: Monitor millions of product listings across many e-commerce sites at once.

Speed: Identify emerging threats in near real-time.

Accuracy: Reduce false positives and focus human effort on genuine threats.

Predictive Power: Anticipate where and when gray market activities might emerge.

Multimodal Analysis: Process text, images, and structured data for deeper insights.

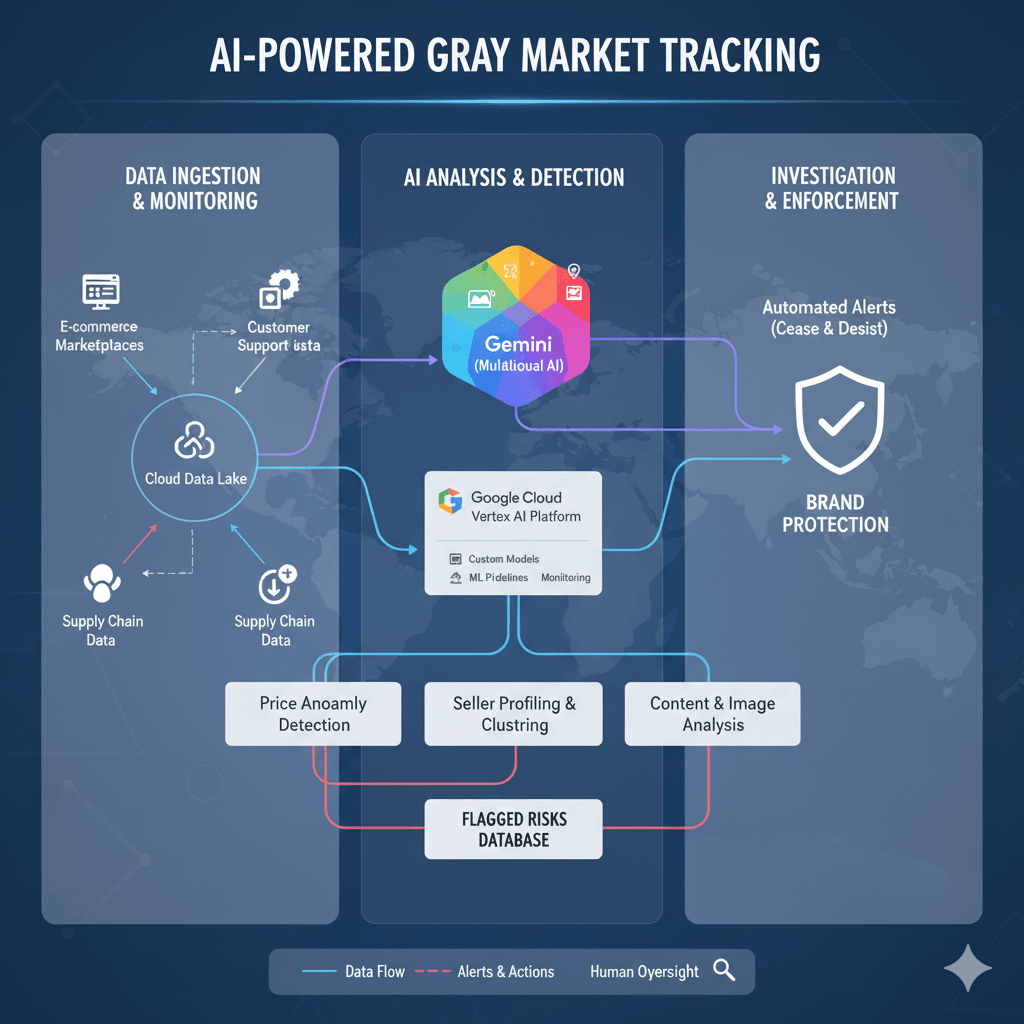

Architecture Overview: Gemini and Vertex AI for Gray Market Tracking

Our solution leverages Google Cloud services, with Vertex AI as the central platform for MLOps and Gemini providing advanced language understanding and multimodal analysis.

Phase 1: Data Ingestion & Monitoring

The foundation of any AI solution is robust data. We need to continuously collect data from various sources.

1. E-commerce Marketplace Scraping: This involves programmatically collecting product listing data (titles, descriptions, prices, seller info, images, URLs, shipping origins) from major e-commerce platforms like Amazon, eBay, Alibaba, regional marketplaces, and even social commerce sites.

Tools: Custom Python scripts (using libraries like BeautifulSoup, Selenium, or Scrapy) or third-party web scraping services.

Storage: Ingested data is stored in a Google Cloud Storage (GCS) bucket, then loaded into BigQuery for structured querying and analysis.

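Data Ingestion (Python sketch; the site-specific scraping helpers and the table name are placeholders):

```python
import time

from google.cloud import bigquery

# Placeholder configuration: search URL -> product keywords to monitor on that site
CONFIG_SITES_AND_KEYWORDS = {"https://marketplace.example.com/search": ["brand-x headphones"]}
SCRAPING_INTERVAL = 24 * 60 * 60  # seconds, e.g., daily
TABLE_ID = "your-project.brand_protection.ecommerce_listings_raw"  # placeholder table

def fetch_search_results(site_url, keyword):
    """Placeholder: return raw search results using Scrapy, Selenium, or BeautifulSoup."""
    raise NotImplementedError

def extract_listing_details(result):
    """Placeholder: return a dict with title, price, seller_id, description,
    image_url, shipping_origin, and listing_url."""
    raise NotImplementedError

def scrape_ecommerce_site(site_url, product_keywords):
    listings = []
    for keyword in product_keywords:
        for result in fetch_search_results(site_url, keyword):
            listings.append(extract_listing_details(result))
    return listings

def store_to_bigquery(client, rows, table_id):
    # Streaming insert; insert_rows_json returns a list of row errors (empty on success)
    errors = client.insert_rows_json(table_id, rows)
    if errors:
        raise RuntimeError(f"BigQuery insert failed: {errors}")

def main():
    client = bigquery.Client()
    while True:  # main ingestion loop
        all_listings = []
        for site, keywords in CONFIG_SITES_AND_KEYWORDS.items():
            all_listings.extend(scrape_ecommerce_site(site, keywords))
        if all_listings:
            store_to_bigquery(client, all_listings, TABLE_ID)
        time.sleep(SCRAPING_INTERVAL)

if __name__ == "__main__":
    main()
```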

2. Internal Data Integration: Integrate your own data, such as:

Authorized Seller List: A definitive list of all legitimate distributors and retailers.

MAP (Minimum Advertised Price) Policies: Current pricing guidelines for your products.

Product Master Data: SKUs, descriptions, images, regional variations.

Customer Support Logs: Text data from customer inquiries that might indicate gray market purchases (e.g., warranty issues, non-standard packaging complaints).

Storage: Also stored in BigQuery for unified querying.
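With the authorized seller list and MAP policies stored next to the scraped listings, a rule-based baseline is possible before any model is trained: flag listings from sellers who are not on the authorized list, and listings advertised below MAP. A minimal sketch, with plain Python collections standing in for the BigQuery tables; the field names, seller IDs, and prices are hypothetical:

```python
def rule_based_flags(listings, authorized_sellers, map_prices):
    """Return listings that break the authorized-seller or MAP rules.

    listings: iterable of dicts with 'listing_id', 'seller_id', 'product_sku', 'price'
    authorized_sellers: set of authorized seller IDs
    map_prices: dict mapping product_sku -> minimum advertised price
    """
    flagged = []
    for listing in listings:
        reasons = []
        if listing["seller_id"] not in authorized_sellers:
            reasons.append("unauthorized_seller")
        map_price = map_prices.get(listing["product_sku"])
        if map_price is not None and listing["price"] < map_price:
            reasons.append("below_map")
        if reasons:
            flagged.append({"listing_id": listing["listing_id"], "reasons": reasons})
    return flagged


listings = [
    {"listing_id": "L1", "seller_id": "S1", "product_sku": "SKU-100", "price": 199.0},
    {"listing_id": "L2", "seller_id": "S9", "product_sku": "SKU-100", "price": 149.0},
    {"listing_id": "L3", "seller_id": "S1", "product_sku": "SKU-100", "price": 179.0},
]
flags = rule_based_flags(listings, {"S1", "S2"}, {"SKU-100": 189.0})
# L2 is unauthorized and below MAP; L3 is authorized but below MAP; L1 passes both rules
```

In BigQuery, the same checks amount to a LEFT JOIN of the listings table against the authorized seller and MAP tables, keeping rows with no seller match or a price below MAP.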

Phase 2: AI Analysis & Detection (Vertex AI & Gemini)

This is the core of the AI solution, where raw data is transformed into actionable insights. Vertex AI provides the managed infrastructure for training, deploying, and monitoring custom machine learning models, while Gemini brings advanced multimodal understanding.

A. Price Anomaly Detection (Vertex AI Custom Models)

Goal: Identify listings with prices significantly deviating from expected MAP or market averages, a key indicator of gray market activity.

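Methodology: Train a regression model to predict the expected price, or apply anomaly detection to historical price data, either with an unsupervised algorithm such as Isolation Forest or with a simple statistical rule such as the interquartile range (IQR) method.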
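The IQR method needs no model training: for each SKU, prices below `Q1 - k * IQR` or above `Q3 + k * IQR` are treated as outliers, with `k = 1.5` as the conventional default. A minimal sketch using only the standard library; the input shape (a list of `(listing_id, sku, price)` tuples) and the `min_listings` cutoff are assumptions for illustration:

```python
import statistics
from collections import defaultdict

def iqr_price_outliers(rows, k=1.5, min_listings=8):
    """Flag listings whose price falls outside [Q1 - k*IQR, Q3 + k*IQR] for their SKU.

    rows: iterable of (listing_id, sku, price) tuples.
    Returns a list of (listing_id, sku, price, direction) tuples.
    """
    by_sku = defaultdict(list)
    for listing_id, sku, price in rows:
        by_sku[sku].append((listing_id, price))

    outliers = []
    for sku, items in by_sku.items():
        prices = [price for _, price in items]
        if len(prices) < min_listings:
            continue  # too few listings for stable quartiles
        q1, _, q3 = statistics.quantiles(prices, n=4)
        iqr = q3 - q1
        low, high = q1 - k * iqr, q3 + k * iqr
        for listing_id, price in items:
            if price < low:
                outliers.append((listing_id, sku, price, "low"))
            elif price > high:
                outliers.append((listing_id, sku, price, "high"))
    return outliers
```

Reporting the direction matters here: unusually low prices are the more typical gray market signal, while unusually high prices may point to scarce, transshipped stock.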

Price Anomaly Detection (Python in a Vertex AI Workbench notebook; the table name is a placeholder):

```python
from google.cloud import bigquery
from sklearn.ensemble import IsolationForest

TABLE = "your-project.brand_protection.ecommerce_listings_raw"  # placeholder table
MIN_LISTINGS_PER_SKU = 20  # illustrative: skip SKUs with too few listings to model

def detect_price_anomalies():
    client = bigquery.Client()
    query = f"""
        SELECT listing_id, seller_id, product_sku, price, listed_date
        FROM `{TABLE}`
        WHERE listed_date >= DATE_SUB(CURRENT_DATE(), INTERVAL 30 DAY)
    """
    df = client.query(query).to_dataframe()

    anomalies = []
    for sku, sku_df in df.groupby("product_sku"):
        if len(sku_df) < MIN_LISTINGS_PER_SKU:
            continue  # not enough data for anomaly detection

        # Train one Isolation Forest per SKU on price alone (assumes ~5% anomalies)
        model = IsolationForest(contamination=0.05, random_state=42)
        labels = model.fit_predict(sku_df[["price"]])

        # fit_predict returns -1 for anomalies and 1 for inliers; both unusually
        # low and unusually high prices are flagged
        for _, row in sku_df[labels == -1].iterrows():
            anomalies.append({
                "listing_id": row["listing_id"],
                "seller_id": row["seller_id"],
                "product_sku": sku,
                "price": row["price"],
                "reason": "Price anomaly detected by Isolation Forest",
            })
    return anomalies

# Deploy this as a scheduled job on Vertex AI Workbench or Vertex AI Pipelines
```

B. Seller Profiling & Clustering (Vertex AI Custom Models & Gemini)

Goal: Identify unknown or suspicious sellers. Group similar unauthorized sellers to uncover networks.

Methodology:

Feature Engineering: Extract features from seller names, historical sales, number of listings, ratings, shipping locations, etc.

Clustering: Use algorithms like K-Means or DBSCAN to group sellers. New sellers not fitting into authorized clusters are flagged.

Gemini for Seller Bio Analysis: Use Gemini to understand unstructured text in seller profiles (if available) or even forum discussions about sellers.
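To uncover networks rather than isolated sellers, the cluster labels can also be read the other way around: a cluster that contains several sellers but no authorized ones is a candidate network worth investigating as a group. A minimal sketch, assuming cluster labels have already been assigned (for example by DBSCAN, which labels noise points `-1`); the seller IDs and `min_size` default are hypothetical:

```python
from collections import defaultdict

def candidate_networks(sellers, min_size=2):
    """Return clusters that contain at least min_size sellers and no authorized seller.

    sellers: iterable of (seller_id, cluster_label, is_authorized) tuples.
    Noise points (cluster_label == -1) are skipped because they belong to no cluster.
    """
    members = defaultdict(list)
    has_authorized = defaultdict(bool)
    for seller_id, cluster, is_authorized in sellers:
        if cluster == -1:
            continue
        members[cluster].append(seller_id)
        has_authorized[cluster] = has_authorized[cluster] or bool(is_authorized)

    return {
        cluster: sorted(ids)
        for cluster, ids in members.items()
        if not has_authorized[cluster] and len(ids) >= min_size
    }


sellers = [
    ("auth-1", 0, True), ("auth-2", 0, True), ("s-7", 0, False),
    ("s-3", 1, False), ("s-4", 1, False), ("s-5", 1, False),
    ("s-9", 2, False),
    ("s-8", -1, False),
]
networks = candidate_networks(sellers)  # {1: ['s-3', 's-4', 's-5']}
```

Cluster 0 is excluded because it contains authorized sellers, and cluster 2 is too small to suggest a network; both thresholds are judgment calls to tune against reviewed cases.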

Seller Profiling (Python on Vertex AI; the dataset name and Gemini model name are placeholders):

```python
from google.cloud import bigquery
from sklearn.cluster import DBSCAN
from sklearn.preprocessing import StandardScaler

DATASET = "your-project.brand_protection"  # placeholder dataset

def profile_sellers():
    client = bigquery.Client()
    query = f"""
        SELECT
          seller_id,
          seller_name,
          AVG(rating) AS avg_rating,
          COUNT(listing_id) AS num_listings,
          STRING_AGG(DISTINCT shipping_origin) AS unique_origins,
          -- 1 if the seller is on the internal authorized seller list
          CASE WHEN seller_id IN (SELECT authorized_id FROM `{DATASET}.authorized_sellers`)
               THEN 1 ELSE 0 END AS is_authorized
        FROM `{DATASET}.ecommerce_listings_raw`
        GROUP BY seller_id, seller_name
    """
    df = client.query(query).to_dataframe()

    # One indicator column per shipping origin (STRING_AGG joins origins with commas)
    features = df["unique_origins"].fillna("").str.get_dummies(sep=",").add_prefix("origin_")
    # Scale numeric features so neither dominates DBSCAN's distance metric
    scaled = StandardScaler().fit_transform(df[["avg_rating", "num_listings"]].astype(float).fillna(0))
    features["avg_rating"] = scaled[:, 0]
    features["num_listings"] = scaled[:, 1]

    # DBSCAN finds arbitrarily shaped clusters and labels points in no cluster as -1 (noise)
    dbscan = DBSCAN(eps=0.5, min_samples=5)  # tune eps and min_samples on your data
    df["cluster"] = dbscan.fit_predict(features)

    # Flag sellers that are not authorized and that DBSCAN marks as noise
    suspicious = df[(df["is_authorized"] == 0) & (df["cluster"] == -1)].copy()
    suspicious["reason"] = "Unauthorized seller outside all seller clusters"
    return suspicious[["seller_id", "seller_name", "reason"]]

def analyze_seller_text_with_gemini(client, text_data, model="gemini-2.5-flash"):
    # client: a google-genai client, e.g. genai.Client(vertexai=True, project=..., location=...)
    # model: assumed model name; use any Gemini model available in your project
    prompt = (
        "Analyze this seller description or customer comment for potential gray market "
        "indicators. Is there anything suspicious about their operations, product sourcing, "
        "or customer service that suggests they might be an unauthorized seller or selling "
        "diverted goods? Respond with 'YES' or 'NO' on the first line, then a brief "
        f"explanation.\n\nText: {text_data}"
    )
    response = client.models.generate_content(model=model, contents=prompt)
    return response.text

# Integrate this into the seller profiling pipeline
```

C. Content & Image Analysis (Gemini Multimodal)

Goal: Detect subtle indicators in listing text and images that point to unauthorized sales or transshipment.

Methodology:

Text Analysis (Gemini):

Language Mismatch: Identify listings for a product typically sold in one region but described in a different regional language (e.g., a product meant for the US market described in Japanese on an eBay listing).

Keyword Detection: Look for terms like "international version," "no warranty," "imported," "bulk buy," or misspellings of brand names.

Sentiment Analysis: Analyze customer reviews for patterns indicative of gray market issues (e.g., complaints about lack of local support, missing components, different packaging).

Image Analysis (Gemini):

Packaging Discrepancies: Compare product images against known authorized packaging for different regions.

Regional Specifics: Look for specific regional labels, certifications, or plug types that don't match the listed shipping origin or intended market.

Counterfeit Indicators: Although these signals relate mainly to counterfeits, Gemini can also flag low-quality images or inconsistent branding that may indicate unauthorized products.

OCR (Optical Character Recognition): Extract text from images (e.g., serial numbers, manufacturing dates, warning labels) for cross-verification.
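Some of the text signals above are cheap enough to compute deterministically before (or alongside) a Gemini call, which keeps model costs down and yields simple features such as `listing_has_misspelling`. A minimal sketch using only the standard library; the phrase list, the 0.8 similarity cutoff, and the script-share heuristic for language mismatch are illustrative assumptions to tune on real listings:

```python
import difflib
import re
import unicodedata

# Illustrative red-flag phrases taken from the keyword list above
RED_FLAG_PHRASES = ["international version", "no warranty", "imported", "bulk buy"]

def find_red_flag_phrases(text):
    lowered = text.lower()
    return [phrase for phrase in RED_FLAG_PHRASES if phrase in lowered]

def has_brand_misspelling(text, brand, cutoff=0.8):
    """True if some word is similar to, but not identical to, the brand name."""
    brand = brand.lower()
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        if word != brand and difflib.SequenceMatcher(None, word, brand).ratio() >= cutoff:
            return True
    return False

def non_latin_letter_share(text):
    """Fraction of letters that are not Latin script: a rough language-mismatch signal."""
    letters = [ch for ch in text if ch.isalpha()]
    if not letters:
        return 0.0
    non_latin = sum(1 for ch in letters if "LATIN" not in unicodedata.name(ch, ""))
    return non_latin / len(letters)
```

A high non-Latin share on a listing for a US-market SKU, for example, is the kind of mismatch worth passing to Gemini for a closer look.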

Multimodal Analysis with Gemini (Python, using the google-genai SDK against Vertex AI; project, location, and model name are placeholders):

```python
import json
import mimetypes

from google import genai
from google.cloud import storage
from google.genai import types

# Assumed setup: a google-genai client pointed at Vertex AI
client = genai.Client(vertexai=True, project="your-project", location="us-central1")
MODEL = "gemini-2.5-flash"  # assumed model name; use any multimodal Gemini model available to you

PROMPT = (
    "Analyze this product listing text and image for signs of gray market activity or "
    "transshipment. Pay attention to language, packaging, regional identifiers, and any "
    "suspicious descriptions. Return JSON with keys 'suspicious' (boolean), "
    "'indicators' (list of strings), and 'explanation' (string).\n\nListing text: '{text}'"
)

def analyze_listing_with_gemini(listing_text, image_uri):
    # Fetch image bytes from GCS (image_uri looks like gs://bucket/path/to/image.jpg)
    bucket_name, blob_name = image_uri.removeprefix("gs://").split("/", 1)
    image_bytes = storage.Client().bucket(bucket_name).blob(blob_name).download_as_bytes()
    mime_type = mimetypes.guess_type(image_uri)[0] or "image/jpeg"

    # Send the image and the text prompt together as one multimodal request
    response = client.models.generate_content(
        model=MODEL,
        contents=[
            types.Part.from_bytes(data=image_bytes, mime_type=mime_type),
            PROMPT.format(text=listing_text),
        ],
        # Request JSON so the verdict can be parsed rather than keyword-matched;
        # add safety_settings=[...] here if your use case needs non-default settings
        config=types.GenerateContentConfig(response_mime_type="application/json"),
    )
    return json.loads(response.text)

# Example usage within a data processing pipeline
def process_new_listing_for_gemini_analysis(listing_id, description_text, image_url):
    analysis = analyze_listing_with_gemini(description_text, image_url)
    if analysis.get("suspicious") is True:
        # Store detailed output and flag for human review
        return {"listing_id": listing_id, "gemini_analysis": analysis, "flagged": True}
    return {"listing_id": listing_id, "flagged": False}
```

D. Fraud & Risk Scoring (Vertex AI Prediction)

Goal: Combine all detected signals into a single risk score for each listing/seller.

Methodology: Train a classification model (e.g., Random Forest, XGBoost) on historical data of known gray market listings vs. legitimate ones. Features would include price anomalies, seller profile characteristics, and Gemini's output scores.
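Gemini returns text, so its analysis has to be turned into numbers before it can feed a classifier. One approach, assumed here, is to prompt Gemini for JSON such as `{"suspicious": true, "confidence": 0.8}` and parse it defensively, falling back to a neutral value when the output is malformed. The sketch below does that and also derives `shipping_origin_mismatch` (one of the features in the risk-scoring query) from a hypothetical mapping of SKUs to their intended markets:

```python
import json

def gemini_indicator_score(raw_output):
    """Map Gemini's JSON verdict to a score in [0, 1]; return 0.5 if it cannot be parsed."""
    try:
        verdict = json.loads(raw_output)
        confidence = float(verdict.get("confidence", 0.5))
        suspicious = verdict["suspicious"] is True
    except (ValueError, KeyError, TypeError, AttributeError):
        return 0.5  # neutral value: no evidence either way
    confidence = min(max(confidence, 0.0), 1.0)
    return confidence if suspicious else 1.0 - confidence

def shipping_origin_mismatch(sku, shipping_origin, intended_markets):
    """1 if the listing ships from a country outside the SKU's intended markets, else 0."""
    markets = intended_markets.get(sku)
    if markets is None:
        return 0  # unknown SKU: no evidence either way
    return int(shipping_origin not in markets)

def risk_features(listing, raw_gemini_output, intended_markets):
    return {
        "gemini_gray_market_indicator_score": gemini_indicator_score(raw_gemini_output),
        "shipping_origin_mismatch": shipping_origin_mismatch(
            listing["product_sku"], listing["shipping_origin"], intended_markets
        ),
    }
```

Returning 0.5 rather than 0 for unparseable output keeps a formatting failure from looking like evidence of a legitimate listing.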

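Risk Scoring (Python on Vertex AI; table, bucket, and column names are placeholders):

```python
import joblib
from google.cloud import aiplatform, bigquery, storage
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split

FEATURES = [
    "price_anomaly_score",
    "seller_cluster_outlier_score",
    "gemini_gray_market_indicator_score",  # numerical score derived from Gemini's analysis
    "listing_has_misspelling",
    "shipping_origin_mismatch",
]

def train_risk_model(bucket_name="your-bucket"):
    client = bigquery.Client()
    # Assumes a table where human reviewers have labeled each listing
    # as gray market (TRUE) or legitimate (FALSE) in is_gray_market_label
    columns = ", ".join(FEATURES)
    query = f"""
        SELECT {columns}, is_gray_market_label
        FROM `your-project.brand_protection.features_for_risk_scoring`
    """
    df = client.query(query).to_dataframe()

    X = df[FEATURES].astype(float)
    y = df["is_gray_market_label"].astype(int)
    # Stratify so the (usually rare) gray market class appears in both splits
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42, stratify=y
    )

    model = RandomForestClassifier(n_estimators=100, random_state=42)
    model.fit(X_train, y_train)
    print(classification_report(y_test, model.predict(X_test)))  # evaluate on held-out data

    # Vertex AI's prebuilt scikit-learn serving containers expect an artifact named model.joblib
    joblib.dump(model, "model.joblib")
    storage.Client().bucket(bucket_name).blob("risk_model/model.joblib").upload_from_filename("model.joblib")
    # Next: aiplatform.Model.upload(artifact_uri="gs://your-bucket/risk_model", ...) and .deploy(...)
    return model

def predict_risk_score(new_listing_features, endpoint: aiplatform.Endpoint):
    # new_listing_features: numeric values in the same order as FEATURES
    prediction = endpoint.predict(instances=[new_listing_features])
    # The prebuilt scikit-learn container returns predict() output (a class label);
    # returning a gray market probability requires a custom prediction routine
    return prediction.predictions[0]
```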

Phase 3: Investigation & Enforcement

The AI system's output needs to be integrated into existing brand protection workflows.

Automated Alerts & Dashboards:

Tools: Google Cloud Pub/Sub for real-time notifications, Looker Studio (formerly Data Studio) or Grafana for interactive dashboards.

Action: When a listing exceeds a certain risk score, an alert is triggered (e.g., email, Slack notification).
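The thresholding step can be kept separate from the delivery channel: decide which listings cross the alert threshold, then serialize a compact payload that a Pub/Sub publisher (`PublisherClient.publish(topic_path, data)`), an email job, or a Slack integration can send. A minimal sketch; the 0.8 and 0.5 thresholds, the tier names, and the payload fields are illustrative assumptions:

```python
import json

HIGH_RISK, MEDIUM_RISK = 0.8, 0.5  # illustrative thresholds; calibrate on reviewed cases

def risk_tier(score):
    if score >= HIGH_RISK:
        return "high"
    if score >= MEDIUM_RISK:
        return "medium"
    return None  # below the alerting threshold

def build_alert_payloads(scored_listings):
    """Return JSON-encoded bytes for each listing at or above the medium threshold,
    highest risk first."""
    payloads = []
    for listing in sorted(scored_listings, key=lambda item: item["risk_score"], reverse=True):
        tier = risk_tier(listing["risk_score"])
        if tier is None:
            continue
        message = {
            "listing_id": listing["listing_id"],
            "listing_url": listing["listing_url"],
            "seller_id": listing["seller_id"],
            "risk_score": round(listing["risk_score"], 3),
            "tier": tier,
        }
        payloads.append(json.dumps(message).encode("utf-8"))
    return payloads
```

Pub/Sub message data must be bytes, which is why each payload is encoded before it is returned.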

Case Management System Integration:

Tools: Integrate with internal CRM or specialized brand protection software.

Action: Automatically create a case for flagged listings, pre-populating it with all relevant data (listing URL, seller details, detected anomalies, Gemini analysis summary, risk score).

Human Review & Test Buys:

Action: Human analysts prioritize high-risk cases. They conduct manual verification, potentially performing test purchases to gather physical evidence (serial numbers, packaging, etc.).

Enforcement Actions:

Automated Cease & Desist (C&D) Letters: For clear violations, template-based C&D letters can be auto-generated for review.

Marketplace Takedowns: File official infringement complaints with e-commerce platforms using the collected evidence.

Legal Action: For persistent or large-scale infringers, escalate to legal counsel.
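Template-based letters can be produced with ordinary string templating, filling placeholders from the case record so a reviewer only has to check and approve the draft. A minimal sketch with Python's `string.Template`; the template wording and the field names are hypothetical placeholders, not letter text:

```python
from string import Template

# Hypothetical placeholder template; real wording would come from the legal team
CEASE_AND_DESIST = Template(
    "To: $seller_name (seller ID $seller_id)\n"
    "Re: Unauthorized sale of $brand products on $marketplace\n\n"
    "Listing: $listing_url\n"
    "Evidence on file: $evidence_summary\n"
)

REQUIRED_FIELDS = (
    "seller_name", "seller_id", "brand", "marketplace", "listing_url", "evidence_summary",
)

def draft_cease_and_desist(case):
    """Fill the template from a case record; raise ValueError if a required field is empty."""
    missing = [field for field in REQUIRED_FIELDS if not case.get(field)]
    if missing:
        raise ValueError(f"case is missing fields: {missing}")
    return CEASE_AND_DESIST.substitute({field: case[field] for field in REQUIRED_FIELDS})
```

Refusing to draft when a field is empty keeps incomplete cases from producing letters with gaps that a reviewer might miss.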

Benefits of this AI-Powered Approach

Proactive Detection: Move from reactive to proactive brand protection.

Reduced Manual Effort: Automate repetitive tasks, freeing up human analysts for complex investigations.

Enhanced Accuracy: Combining price, seller, and content signals into a single risk score can identify gray market listings more precisely than any one signal alone.

Data-Driven Decisions: Gain deep insights into gray market patterns, common leak points, and effective enforcement strategies.

Scalable Solution: Easily adapt to growing product lines and expanding e-commerce landscapes.

Conclusion

Leveraging the power of Gemini's advanced multimodal AI capabilities and Vertex AI's robust MLOps platform, brands can build a sophisticated and highly effective system to track and combat transshipment and gray market activities on popular e-commerce sites. This not only protects revenue but also safeguards brand reputation and ensures a consistent customer experience. By embracing AI, brands can stay ahead in the dynamic world of online commerce.