Back when I worked third shift at a bustling distribution center, I was always amazed at how a single equipment breakdown could set off hours of domino-effect chaos. That was before I saw firsthand what layered AI could do: delays and confusion became rare, and the work felt almost intuitive, as if the warehouse could 'see' around every corner. Today, that kind of warehouse is not science fiction. Let's dive into the 5-layer AI framework that makes it possible, with lessons from crowded aisles, bad coffee, and those unforgettable nights when innovation just made life easier.

Blind Spots, Rigid Rules, and the Real Cost of Stalled Warehouses

In my years working with warehouse management systems, I've seen firsthand how quickly a smooth operation can unravel. Traditional WMS platforms are built to log transactions and enforce standard processes, but they struggle with the unpredictable nature of real-world warehouse operations. Most legacy systems are reactive: they follow rigid "if-then" rules that fire only after a problem has already occurred, which leaves dangerous blind spots and lets costly bottlenecks build before anyone responds.
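To make the "reactive" problem concrete, here is a minimal sketch contrasting a static reorder-point rule with a forecast-aware check. The `Pickface` class, both functions, and the demand forecast passed in are hypothetical illustrations, not the logic of any particular WMS.

```python
from dataclasses import dataclass


@dataclass
class Pickface:
    """Hypothetical pick location as a legacy WMS might model it."""
    sku: str
    on_hand: int    # units currently in the pick location
    min_level: int  # static reorder point configured by an administrator


def static_rule_replenish(face: Pickface) -> bool:
    """Legacy if-then rule: fires only after stock has already dropped below the minimum."""
    return face.on_hand < face.min_level


def forecast_aware_replenish(face: Pickface, forecast_units_next_hour: int) -> bool:
    """Proactive check: fires if forecast demand would push stock below the minimum."""
    return face.on_hand - forecast_units_next_hour < face.min_level


face = Pickface(sku="X-500", on_hand=40, min_level=20)
print(static_rule_replenish(face))         # False: the rule sees no problem yet
print(forecast_aware_replenish(face, 30))  # True: 40 - 30 = 10 units, below the minimum
```

With 40 units on hand and a minimum of 20, the static rule stays silent even though a forecast of 30 units in the next hour will drain the shelf; the forecast-aware check flags it immediately. Supplying that forecast is the job of a predictive layer.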

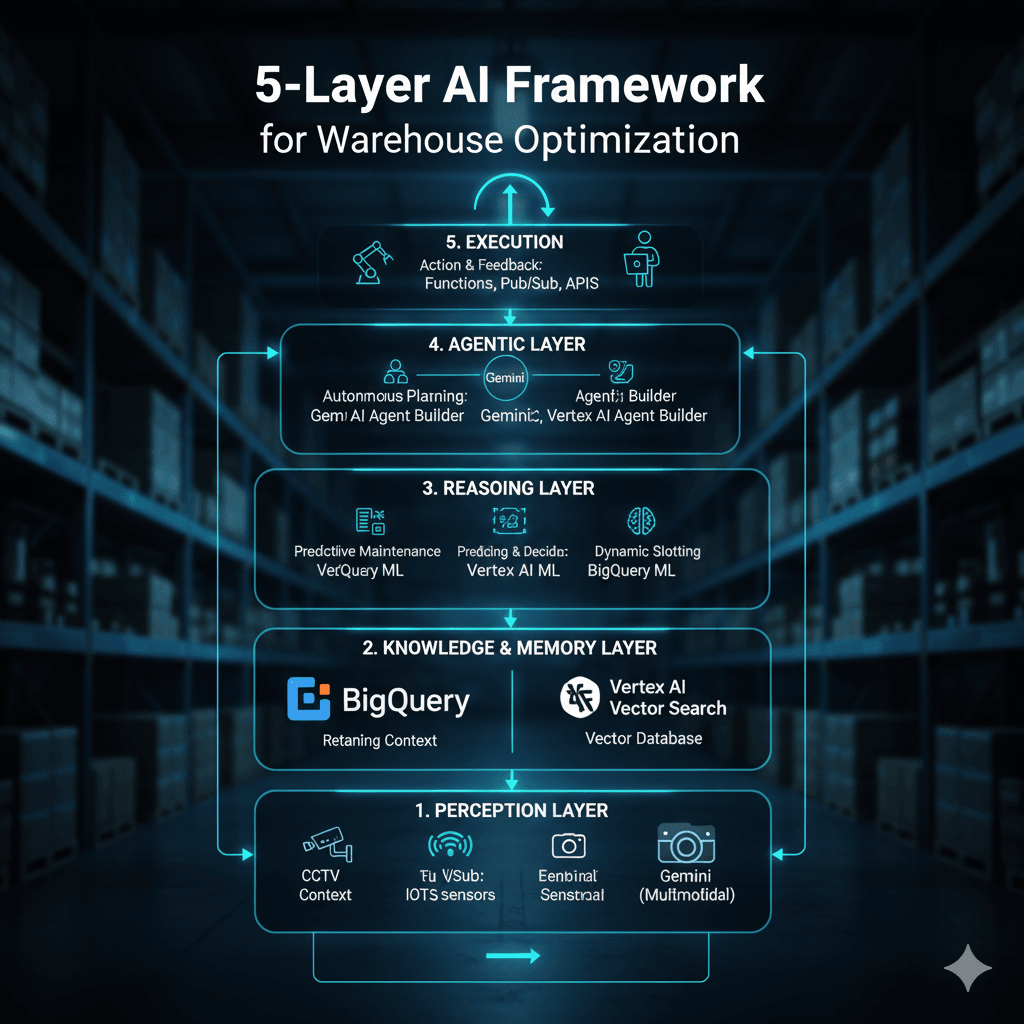

The 5-Layer AI Framework: A Technical Blueprint for Warehouse Optimization

The future of warehousing is not a better WMS; it's an agentic AI framework that can perceive, reason, and act autonomously. This blueprint details a five-layer architecture, built on Google Cloud's Gemini and Vertex AI, that turns operational data into prescriptive intelligence: recommended and automated actions rather than after-the-fact reports.
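Before looking at each layer, it may help to see how data is meant to flow between them. The following is a toy, in-memory sketch under stated assumptions: every function name, the event fields, and the stockout heuristic are hypothetical stand-ins for the Google Cloud services described in each layer.

```python
from typing import Any, Dict, List

Event = Dict[str, Any]


def perceive(raw: Event) -> Event:
    """Layer 1 (Perception): normalize a raw scanner reading into a structured event."""
    return {"sku": raw["sku"].strip().upper(), "on_hand": int(raw["qty"])}


def remember(event: Event, history: List[Event]) -> Event:
    """Layer 2 (Memory): store the event and attach recent context for the same SKU."""
    history.append(event)
    recent = [e["on_hand"] for e in history if e["sku"] == event["sku"]][-3:]
    return {**event, "recent": recent}


def reason(event: Event) -> Event:
    """Layer 3 (Reasoning): toy prediction, flag risk if stock is low and falling."""
    recent = event["recent"]
    falling = len(recent) >= 2 and recent[-1] < recent[0]
    return {**event, "stockout_risk": falling and event["on_hand"] < 10}


def decide(event: Event) -> List[str]:
    """Layer 4 (Agentic): choose tools to call; a stand-in for Gemini function calling."""
    return ["dispatch_amr", "notify_wms"] if event["stockout_risk"] else []


def execute(actions: List[str], outcomes: List[Event]) -> None:
    """Layer 5 (Execution): run actions and record outcomes for retraining."""
    for action in actions:
        outcomes.append({"action": action, "status": "DONE"})


def run_pipeline(raw: Event, history: List[Event], outcomes: List[Event]) -> List[str]:
    event = reason(remember(perceive(raw), history))
    actions = decide(event)
    execute(actions, outcomes)
    return actions


history: List[Event] = []
outcomes: List[Event] = []
for qty in (20, 12, 8):
    print(run_pipeline({"sku": " x-500 ", "qty": qty}, history, outcomes))
# prints [], [], then ['dispatch_amr', 'notify_wms']
```

Each layer only consumes the previous layer's output, which is what lets the real services (Pub/Sub, BigQuery, Vertex AI, Gemini, Cloud Functions) be swapped in one layer at a time.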

Layer 1: The Perception Layer – Ingestion and Multimodal Awareness 👁️

This layer is the nervous system, aggregating and digitizing all real-world events. It transforms raw sensor data, log files, and visual feeds into structured data streams.

Key Tools: Google Cloud Pub/Sub, Vertex AI Vision, Gemini API

| Use Case | AI Tool | Code Focus |
|---|---|---|
| Real-Time Data Stream | Cloud Pub/Sub | Ingesting scanner and IoT data for immediate processing. |
| Multimodal Inbound QA | Gemini (via Vertex AI) | Analyzing a photo of damaged goods alongside the receiving clerk's text notes. |
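For the real-time data stream, each scanner or IoT reading has to be serialized before it is published. This is a sketch assuming a JSON message body and a hypothetical `event_type` attribute; the payload fields, device ID, and topic name are placeholders. `PublisherClient`, `topic_path`, and `publish` come from the `google-cloud-pubsub` library, which is imported lazily so the payload builder runs without GCP credentials.

```python
import json
from datetime import datetime, timezone
from typing import Dict, Tuple


def build_scan_message(device_id: str, sku: str, location: str, qty: int) -> Tuple[bytes, Dict[str, str]]:
    """Serialize a scanner event into a Pub/Sub body (bytes) plus string attributes."""
    payload = {
        "device_id": device_id,
        "sku": sku,
        "location": location,
        "qty": qty,
        "event_time": datetime.now(timezone.utc).isoformat(),
    }
    data = json.dumps(payload).encode("utf-8")
    # Attribute values must be strings; subscribers can filter on them without parsing the body
    attributes = {"event_type": "SCAN", "device_id": device_id}
    return data, attributes


def publish_scan(project_id: str, topic_id: str, data: bytes, attributes: Dict[str, str]) -> str:
    """Publish one message and block until Pub/Sub returns its message ID."""
    from google.cloud import pubsub_v1  # requires the google-cloud-pubsub package

    publisher = pubsub_v1.PublisherClient()
    topic_path = publisher.topic_path(project_id, topic_id)
    future = publisher.publish(topic_path, data, **attributes)
    return future.result()


data, attributes = build_scan_message("SCN-07", "X-500", "A12", 3)
# publish_scan("your-gcp-project", "scanner-events", data, attributes)
```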

Code Example: Processing a Multimodal Damage Report (Python)

This snippet shows how Gemini processes an image and text to classify the damage and suggest an action (a core capability of the Perception Layer).

```python
# Classify inbound damage using Gemini via the Vertex AI SDK
import json
import mimetypes

import vertexai
from vertexai.generative_models import GenerationConfig, GenerativeModel, Part


def analyze_damage_report(image_uri, text_description):
    """Classifies damage severity from an image and the clerk's text notes."""
    # 1. Load the image from Cloud Storage; infer the MIME type from the file extension
    mime_type = mimetypes.guess_type(image_uri)[0] or "image/jpeg"
    image = Part.from_uri(image_uri, mime_type=mime_type)

    # 2. Construct the multimodal prompt
    prompt = f"""
    Analyze the image and the following text.
    Classify the damage severity (CRITICAL, MODERATE, LOW).
    Suggest a next step (REJECT_SHIPMENT, ISOLATE_FOR_QA, ACCEPT_WITH_NOTE).
    Respond with a JSON object with the keys "severity", "action", and "reason".
    Text Description from Clerk: "{text_description}"
    """

    # 3. Call the Gemini model and request structured JSON output
    model = GenerativeModel("gemini-2.5-flash")
    response = model.generate_content(
        [image, prompt],
        generation_config=GenerationConfig(response_mime_type="application/json"),
    )

    # 4. Parse the JSON so Layer 2 receives a Python dict
    return json.loads(response.text)


# Example execution (requires a GCP project with Vertex AI enabled):
# vertexai.init(project="your-gcp-project", location="us-central1")
# analysis = analyze_damage_report(
#     image_uri="gs://warehouse-data/pallet_a123.jpg",
#     text_description="Pallet shifted, 3 boxes crushed on one corner. Product visible.",
# )
# print(analysis)

# Illustrative output (actual model output will vary); Layer 2 then processes this JSON:
# {"severity": "CRITICAL", "action": "REJECT_SHIPMENT", "reason": "Structural integrity compromised."}
```
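Because a model can still return an unexpected label, it is worth validating the response before Layer 2 stores it. A minimal sketch, assuming the severity and action vocabularies from the prompt above; `validate_damage_report` is a hypothetical helper that accepts either the raw JSON text or an already-parsed dict. (The Vertex AI `GenerationConfig` also accepts a `response_schema`, which can constrain the output on the server side.)

```python
import json
from typing import Any, Dict, Union

ALLOWED_SEVERITY = {"CRITICAL", "MODERATE", "LOW"}
ALLOWED_ACTIONS = {"REJECT_SHIPMENT", "ISOLATE_FOR_QA", "ACCEPT_WITH_NOTE"}


def validate_damage_report(raw: Union[str, Dict[str, Any]]) -> Dict[str, Any]:
    """Parse (if needed) and check a damage classification before it reaches Layer 2."""
    report = json.loads(raw) if isinstance(raw, str) else dict(raw)
    if report.get("severity") not in ALLOWED_SEVERITY:
        raise ValueError(f"unexpected severity: {report.get('severity')!r}")
    if report.get("action") not in ALLOWED_ACTIONS:
        raise ValueError(f"unexpected action: {report.get('action')!r}")
    report.setdefault("reason", "")  # keep the schema stable for downstream tables
    return report


ok = validate_damage_report(
    '{"severity": "CRITICAL", "action": "REJECT_SHIPMENT", "reason": "Structural integrity compromised."}'
)
print(ok["action"])  # REJECT_SHIPMENT
```

Rejected responses can be routed to a human QA queue instead of silently entering the knowledge layer.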

Layer 2: The Knowledge & Memory Layer – Storing Context for RAG 🧠

This layer ensures the AI has rich, searchable context, serving as the central "memory." It uses a hybrid approach: BigQuery for structured facts, and vector embeddings (searchable with BigQuery Vector Search or Vertex AI Vector Search) for the semantic meaning of unstructured documents.

Key Tools: BigQuery, BigQuery Vector Search (or Vertex AI Vector Search)

| Use Case | AI Tool | Code Focus |
|---|---|---|
| Transactional Data | BigQuery | The core data warehouse for all WMS/ERP facts. |
| Semantic Search | BigQuery ML and Vector Search | Converting manuals into vectors to power contextual answers (RAG). |
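To show how the two halves of memory come together, here is a minimal sketch of assembling a retrieval-augmented prompt from structured facts (as a BigQuery row might supply) plus the top text snippets returned by a vector search. The function name, prompt wording, `max_snippets` cutoff, and sample data are illustrative assumptions.

```python
from typing import Dict, List


def build_rag_prompt(question: str, facts: Dict[str, object], snippets: List[str],
                     max_snippets: int = 3) -> str:
    """Combine structured facts and retrieved documents into one grounded prompt."""
    fact_lines = "\n".join(f"- {key}: {value}" for key, value in sorted(facts.items()))
    # Number the snippets so the model can cite which source it relied on
    context = "\n".join(f"[{i}] {text}" for i, text in enumerate(snippets[:max_snippets], start=1))
    return (
        "Answer using only the context below and cite snippet numbers.\n\n"
        f"Structured facts:\n{fact_lines}\n\n"
        f"Retrieved documents:\n{context}\n\n"
        f"Question: {question}"
    )


prompt = build_rag_prompt(
    question="What fixed error E-404 on conveyor CV-3 last time?",
    facts={"equipment_id": "CV-3", "error_code": "E-404", "hours_since_service": 412},
    snippets=["Replaced drive belt tensioner.", "Cleared photo-eye obstruction.",
              "Recalibrated motor controller.", "Lubricated rollers."],
)
print(prompt)
```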

Code Example: Generating Embeddings and Vector Search (SQL/BigQuery ML)

This BQML snippet generates vector embeddings for a table of maintenance logs, making them semantically searchable.

```sql
-- 1. Create a remote model that points at the Vertex AI embedding endpoint
--    (assumes a Cloud resource connection named vertex_ai_conn already exists in us-central1)
CREATE OR REPLACE MODEL `warehouse_memory.log_embedding_model`
  REMOTE WITH CONNECTION `us-central1.vertex_ai_conn`
  OPTIONS (ENDPOINT = 'text-embedding-004');

-- 2. Generate embeddings for the maintenance log text.
--    ML.GENERATE_EMBEDDING is a table function and expects the input text in a column named `content`.
CREATE OR REPLACE TABLE `warehouse_memory.maintenance_embeddings` AS
SELECT
  log_id,
  content AS log_text,
  ml_generate_embedding_result AS embedding
FROM ML.GENERATE_EMBEDDING(
  MODEL `warehouse_memory.log_embedding_model`,
  (
    SELECT log_id, log_text AS content
    FROM `warehouse_raw_data.maintenance_logs`
    WHERE log_text IS NOT NULL
  ),
  STRUCT(TRUE AS flatten_json_output)
)
WHERE ml_generate_embedding_status = '';  -- keep only rows that were embedded successfully

-- 3. Create a vector index for fast semantic lookups (optional, but highly recommended)
CREATE VECTOR INDEX IF NOT EXISTS maintenance_index
ON `warehouse_memory.maintenance_embeddings`(embedding)
OPTIONS (index_type = 'IVF', distance_type = 'COSINE');

-- This index now allows Layer 4 (the Agent) to ask:
-- "Which past log entry is most similar to the current conveyor belt error code 'E-404'?"
```
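Conceptually, the index answers a nearest-neighbour question. BigQuery's `COSINE` distance is one minus cosine similarity, so the brute-force version below ranks log entries the same way an exact search would; an IVF index approximates this without scanning every row (in BigQuery itself, the lookup would go through the `VECTOR_SEARCH` function). The log IDs and three-dimensional vectors are made-up toy data; real `text-embedding-004` vectors have 768 dimensions.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k_similar(query: Sequence[float], rows: List[Tuple[str, List[float]]],
                  k: int = 3) -> List[Tuple[str, float]]:
    """Exact nearest neighbours by cosine distance (1 - similarity), smallest first."""
    scored = [(log_id, 1.0 - cosine_similarity(query, emb)) for log_id, emb in rows]
    scored.sort(key=lambda pair: pair[1])
    return scored[:k]


logs = [
    ("LOG-101", [0.9, 0.1, 0.0]),
    ("LOG-205", [0.0, 1.0, 0.2]),
    ("LOG-318", [0.7, 0.3, 0.1]),
]
for log_id, distance in top_k_similar([1.0, 0.0, 0.0], logs, k=2):
    print(log_id, round(distance, 3))
```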

Layer 3: The Reasoning Layer – Predictive ML Framework 💡

The Reasoning Layer consumes the structured and vector data from Layer 2 to run predictive models. It handles two core task types: classification (what will happen?) and regression (how much, and when?).

Key Tools: Vertex AI Workbench, AutoML, BigQuery ML

| Use Case | AI Tool | Code Focus |
|---|---|---|
| Predictive Maintenance | Vertex AI (AutoML) | Binary Classification to predict equipment failure (Yes/No). |
| Labor Forecasting | Vertex AI (Custom Training/BQML) | Regression to predict total picking hours needed tomorrow. |
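For the labor-forecasting row, the regression idea can be shown with ordinary least squares on a single feature. This toy sketch assumes picking hours scale roughly linearly with daily order lines; the history is invented for illustration, and a production model (for example a BQML `LINEAR_REG` model or a Vertex AI custom job) would use many more features.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


# Hypothetical history: order lines per day vs. total picking hours worked
order_lines = [1000, 1500, 2000, 2500]
pick_hours = [52, 77, 102, 127]
slope, intercept = fit_line(order_lines, pick_hours)
forecast_lines = 1800
print(f"Forecast picking hours: {slope * forecast_lines + intercept:.1f}")  # Forecast picking hours: 92.0
```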

Code Example: Training a Predictive Maintenance Model (Vertex AI SDK)

This demonstrates how to train a classification model on structured (tabular) data with the Vertex AI Python SDK.

```python
# Train a binary classification model on BigQuery data with Vertex AI AutoML
from google.cloud import aiplatform

PROJECT_ID = "your-gcp-project"
REGION = "us-central1"
BQ_TABLE = f"{PROJECT_ID}.bq_dataset_name.maintenance_data"  # BigQuery source table
TARGET_COLUMN = "failed_in_next_72h"  # The binary target (0 or 1)

# Initialize the Vertex AI client
aiplatform.init(project=PROJECT_ID, location=REGION)

# 1. Register the BigQuery table as a Vertex AI tabular dataset
dataset = aiplatform.TabularDataset.create(
    display_name="Maintenance_Failure_Predictor",
    bq_source=f"bq://{BQ_TABLE}",
)

# 2. Define the AutoML training job for classification
job = aiplatform.AutoMlTabularTrainingJob(
    display_name="Maintenance_Classifier_Job",
    optimization_prediction_type="classification",
)

# 3. Run the training job. The target column is passed to run(), not the constructor;
#    the rest of the configuration (training budget, column transformations, etc.) also goes here.
model = job.run(
    dataset=dataset,
    target_column=TARGET_COLUMN,
    model_display_name="maintenance_failure_classifier",
)
print(f"Model trained: {model.resource_name}")

# 4. run() returns a trained but not yet deployed model; deploy it so Layer 4 can query it
# endpoint = model.deploy(machine_type="n1-standard-4")
```
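The training job assumes a `failed_in_next_72h` column already exists in BigQuery. One way to derive it, sketched here under the assumption that you have timestamped sensor readings plus a separate list of recorded failures per machine (the field names and equipment IDs are hypothetical), is to mark each reading 1 if a failure follows within the horizon.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def label_failures(readings: List[dict], failures: Dict[str, List[datetime]],
                   horizon_hours: int = 72) -> List[dict]:
    """Add a binary failed_in_next_72h label to each sensor reading."""
    horizon = timedelta(hours=horizon_hours)
    labeled = []
    for row in readings:
        ts = row["timestamp"]
        upcoming = failures.get(row["equipment_id"], [])
        # Label 1 only if a failure occurs strictly after the reading and within the horizon
        failed = any(ts < failure_ts <= ts + horizon for failure_ts in upcoming)
        labeled.append({**row, "failed_in_next_72h": int(failed)})
    return labeled


t0 = datetime(2024, 1, 1, 8, 0)
readings = [
    {"equipment_id": "CV-3", "timestamp": t0, "motor_temp_c": 71.0},
    {"equipment_id": "CV-3", "timestamp": t0 - timedelta(days=5), "motor_temp_c": 55.0},
    {"equipment_id": "CV-7", "timestamp": t0, "motor_temp_c": 60.0},
]
failures = {"CV-3": [t0 + timedelta(hours=48)]}
print([r["failed_in_next_72h"] for r in label_failures(readings, failures)])  # [1, 0, 0]
```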

Layer 4: The Agentic Layer – Autonomous Decision-Making 🤖

This is the control layer. When an anomaly is detected (e.g., a prediction from Layer 3 or an alert from Layer 1), the AI agent uses Gemini to plan a multi-step response and to decide which of its available tools to call, and in what order.

Key Tools: Gemini, Vertex AI Agent Builder, Function Calling

| Use Case | AI Tool | Code Focus |
|---|---|---|
| Orchestration | Gemini's Function Calling | Deciding which internal "tools" (APIs) to call and in what order based on a complex prompt/situation. |
| Real-Time Pathing | Gemini | Re-routing a picker dynamically due to floor congestion. |
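A language model is not a path planner, so one reasonable design is to let Gemini decide when a picker should be re-routed and hand the route itself to a conventional shortest-path tool. A minimal sketch using Dijkstra's algorithm over a hypothetical aisle graph, where live congestion adds a penalty for entering a node; the graph, travel times, and penalty values are made up.

```python
import heapq
from typing import Dict, List, Optional


def reroute(graph: Dict[str, Dict[str, float]], start: str, goal: str,
            congestion: Optional[Dict[str, float]] = None) -> List[str]:
    """Dijkstra's shortest path; congestion[node] adds extra cost for entering that node."""
    congestion = congestion or {}
    dist = {start: 0.0}
    prev: Dict[str, str] = {}
    heap = [(0.0, start)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue
        visited.add(node)
        if node == goal:
            break
        for nxt, cost in graph.get(node, {}).items():
            new_d = d + cost + congestion.get(nxt, 0.0)
            if new_d < dist.get(nxt, float("inf")):
                dist[nxt] = new_d
                prev[nxt] = node
                heapq.heappush(heap, (new_d, nxt))
    if goal not in dist:
        return []  # goal unreachable
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]


# Hypothetical aisle graph: travel time in seconds between waypoints
aisles = {
    "DOCK": {"A1": 20, "B1": 30},
    "A1": {"A12": 20},
    "B1": {"A12": 30},
}
print(reroute(aisles, "DOCK", "A12"))                         # ['DOCK', 'A1', 'A12']
print(reroute(aisles, "DOCK", "A12", congestion={"A1": 60}))  # ['DOCK', 'B1', 'A12']
```

Exposed as a tool, a function like this lets the agent re-plan as congestion readings from the Perception Layer change.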

Code Example: Agent Reasoning and Tool-Use (Gemini Function Calling)

This agent uses the dispatch_amr tool to react to a predicted stockout and then the notify_wms tool to update the WMS.

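```python
# Agent reasoning and tool use with Gemini function calling (Vertex AI SDK)
from vertexai.generative_models import FunctionDeclaration, GenerativeModel, Tool


# 1. Define the available tools (internal API calls) as Python functions
def dispatch_amr(source_location: str, destination_location: str, priority: str):
    """Dispatches an Autonomous Mobile Robot (AMR) for inventory movement."""
    # Simulates calling an external AMR control system API
    return {"status": "AMR_DISPATCHED", "task_id": "T-4567"}


def notify_wms(task_type: str, details: str):
    """Creates a high-priority task in the Warehouse Management System (WMS)."""
    # Simulates calling the WMS task API
    return {"status": "WMS_TASK_CREATED"}


# 2. Tell Gemini what tools it has access to; the SDK expects declarations, not bare functions
warehouse_tools = Tool(function_declarations=[
    FunctionDeclaration(
        name="dispatch_amr",
        description="Dispatches an AMR to move inventory between two locations.",
        parameters={
            "type": "object",
            "properties": {
                "source_location": {"type": "string"},
                "destination_location": {"type": "string"},
                "priority": {"type": "string", "description": "e.g. HIGH"},
            },
            "required": ["source_location", "destination_location", "priority"],
        },
    ),
    FunctionDeclaration(
        name="notify_wms",
        description="Creates a high-priority task in the WMS.",
        parameters={
            "type": "object",
            "properties": {"task_type": {"type": "string"}, "details": {"type": "string"}},
            "required": ["task_type", "details"],
        },
    ),
])
TOOL_REGISTRY = {"dispatch_amr": dispatch_amr, "notify_wms": notify_wms}

# 3. Create the prompt based on a predictive alert from Layer 3
alert_prompt = """
CRITICAL ALERT: Layer 3 predicted a 90% chance of stockout for SKU 'X-500' in pickface A12.
Current inventory in reserve is at location RACK-10.
I need you to immediately dispatch an AMR to move 5 cases from RACK-10 to A12,
and then log a high-priority replenishment task in the WMS.
"""

# 4. Agent execution: Gemini decides the steps (requires vertexai.init(...) first)
# model = GenerativeModel("gemini-2.5-flash", tools=[warehouse_tools])
# response = model.generate_content(alert_prompt)

# Illustrative function calls returned by the model (actual output will vary):
# response.candidates[0].function_calls -> [
#   dispatch_amr(source_location="RACK-10", destination_location="A12", priority="HIGH"),
#   notify_wms(task_type="REPLENISHMENT", details="Auto-dispatched AMR for SKU X-500."),
# ]

# 5. The Agentic Layer executes these calls sequentially. In a multi-turn loop,
#    each result would be sent back to Gemini as a function response.
# for call in response.candidates[0].function_calls:
#     result = TOOL_REGISTRY[call.name](**dict(call.args))
```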
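Before the Agentic Layer acts on model output, it is prudent to check each requested call against the real function signatures. This sketch assumes the function calls have been converted to plain dicts with `name` and `args` keys, as in the example response above; `execute_function_calls` is a hypothetical helper, and the two stub tools mirror `dispatch_amr` and `notify_wms` so the block runs on its own.

```python
import inspect
from typing import Any, Callable, Dict, List


def execute_function_calls(calls: List[Dict[str, Any]],
                           registry: Dict[str, Callable]) -> List[Dict[str, Any]]:
    """Run model-requested tool calls in order, rejecting unknown tools and malformed arguments."""
    results = []
    for call in calls:
        name, args = call["name"], dict(call["args"])
        tool = registry.get(name)
        if tool is None:
            results.append({"name": name, "error": "UNKNOWN_TOOL"})
            continue
        try:
            inspect.signature(tool).bind(**args)  # TypeError on missing or unexpected arguments
        except TypeError as exc:
            results.append({"name": name, "error": f"BAD_ARGS: {exc}"})
            continue
        results.append({"name": name, "result": tool(**args)})
    return results


# Stub tools standing in for the AMR and WMS integrations
def dispatch_amr(source_location: str, destination_location: str, priority: str):
    return {"status": "AMR_DISPATCHED", "task_id": "T-4567"}


def notify_wms(task_type: str, details: str):
    return {"status": "WMS_TASK_CREATED"}


calls = [
    {"name": "dispatch_amr",
     "args": {"source_location": "RACK-10", "destination_location": "A12", "priority": "HIGH"}},
    {"name": "notify_wms", "args": {"task_type": "REPLENISHMENT"}},  # missing "details"
    {"name": "open_dock_door", "args": {}},  # not a registered tool
]
for outcome in execute_function_calls(calls, {"dispatch_amr": dispatch_amr, "notify_wms": notify_wms}):
    print(outcome)
```

Returning errors as data, rather than raising, lets the agent report them back to Gemini so it can correct the call.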

Layer 5: The Execution Layer – Action and The Continuous Loop 🔄

This final layer executes the API calls chosen in Layer 4 and, crucially, captures the real-world outcome, creating a feedback loop for continuous model improvement. It is also where generative AI adds a natural-language explanation to every automated action.

Key Tools: Cloud Functions, Gemini, Pub/Sub

| Use Case | AI Tool | Code Focus |
|---|---|---|
| Action & API Calls | Cloud Functions/Cloud Run | Securely executing the Agent's decision (calling the AMR/WMS APIs). |
| Feedback Loop | Pub/Sub & BigQuery | Ingesting the outcome metric (e.g., time taken) for model retraining. |
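For the feedback loop, each executed action needs to be recorded as a row that retraining jobs can query. A minimal sketch, assuming a hypothetical outcome schema (`task_id`, `action`, `duration_seconds`, `beat_baseline`, `completed_at`) and a hypothetical table name; the resulting dict is JSON-serializable, so it could be published to Pub/Sub or streamed into BigQuery with the client library's `insert_rows_json`.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict


def build_outcome_row(task_id: str, action: str, started_at: datetime,
                      finished_at: datetime, baseline_seconds: float) -> Dict[str, Any]:
    """Turn one executed action into a flat, JSON-serializable record for retraining."""
    duration = (finished_at - started_at).total_seconds()
    return {
        "task_id": task_id,
        "action": action,
        "duration_seconds": duration,
        "beat_baseline": duration < baseline_seconds,
        "completed_at": finished_at.isoformat(),
    }


start = datetime(2024, 1, 1, 3, 15, tzinfo=timezone.utc)
end = datetime(2024, 1, 1, 3, 17, tzinfo=timezone.utc)
row = build_outcome_row("T-4567", "dispatch_amr", start, end, baseline_seconds=150)
message = json.dumps(row).encode("utf-8")  # body for a Pub/Sub publish
# from google.cloud import bigquery
# bigquery.Client().insert_rows_json("your-gcp-project.ops.agent_outcomes", [row])
```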

Code Example: Generating a Natural Language Summary (GenAI)

After the Agent (Layer 4) executes its tools, the Execution Layer uses GenAI to generate a clear, human-readable summary of the complex automated action.

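```python
# Final logging step after execution: summarize the automated action for humans
from vertexai.generative_models import GenerativeModel


def generate_summary(task_id, actions_taken, outcome_metric, baseline_seconds):
    """Uses Gemini to summarize the complex automation for human review.

    outcome_metric is the task duration in seconds; baseline_seconds is the
    historical average duration for the same task type.
    """
    # 1. Compare against the baseline instead of hard-coding a percentage
    change_pct = (baseline_seconds - outcome_metric) / baseline_seconds * 100
    direction = "faster" if change_pct >= 0 else "slower"

    # 2. Construct the prompt with all execution details
    prompt = f"""
    A warehouse agent performed a complex, automated action.
    Summarize the event for a floor supervisor in under 100 words.
    Task ID: {task_id}
    Actions Taken: {actions_taken}
    Outcome: The task took {outcome_metric} seconds to complete, which is
    {abs(change_pct):.0f}% {direction} than the {baseline_seconds}-second average.
    """

    # 3. Call Gemini (assumes vertexai.init(...) has already been called)
    model = GenerativeModel("gemini-2.5-flash")
    summary = model.generate_content(prompt).text

    # Example summary (illustrative Gen AI output):
    # "Automated Stockout Prevention Protocol (T-4567) was successfully executed. An AMR was immediately dispatched from RACK-10 to the A12 pickface, completing the replenishment 15% faster than the typical manual process. Inventory risk for SKU X-500 is now mitigated."

    # 4. Log the summary and the outcome_metric to BigQuery for model tuning
    #    (e.g., with google.cloud.bigquery.Client().insert_rows_json).
    #    This closes the loop for continuous learning!
    return summary
```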
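Closing the loop also means deciding when the logged outcomes justify retraining. One simple policy, sketched here as an assumption rather than a prescribed method, is to compare recent prediction error against the error measured at training time and retrain once it degrades past a tolerance. The function names, the 25% tolerance, and the picking-hour numbers are hypothetical.

```python
from typing import Sequence


def mean_absolute_error(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """Average absolute gap between predictions and observed outcomes."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def should_retrain(predicted: Sequence[float], actual: Sequence[float],
                   baseline_mae: float, tolerance: float = 0.25) -> bool:
    """Retrain when recent MAE exceeds the training-time MAE by more than `tolerance`."""
    return mean_absolute_error(predicted, actual) > baseline_mae * (1 + tolerance)


# Predicted vs. actual daily picking hours pulled from the outcome table
predicted = [92, 88, 101, 95]
actual = [97, 118, 141, 135]
print(should_retrain(predicted, actual, baseline_mae=10.0))  # True
```

Here the recent error (28.75 hours) is far above the 12.5-hour threshold, so the policy would trigger a new Layer 3 training run.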